Abstract

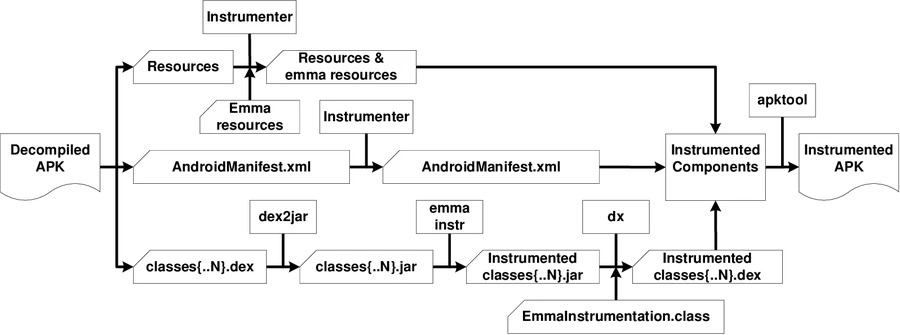

Many state-of-the-art mobile application testing frameworks (e.g., Dynodroid, EvoDroid) rely on Emma or other code coverage libraries to measure the coverage achieved. The underlying assumption of these frameworks is that the app source code is available. Yet application markets and security researchers need to test third-party mobile applications in the absence of the source code. There exist a number of frameworks for both manual and automated test generation that address this challenge. However, these frameworks often provide no statistics on the code coverage achieved, or provide only coarse-grained ones such as the number of activities or methods covered. At the same time, given two test reports generated by different frameworks, there is no way to tell which one achieved better coverage if the metrics used are different (or if no coverage results were provided). To address these issues, we designed a framework called BBoxTester that generates code coverage reports and produces uniform coverage metrics when testing without the source code. Security researchers can automatically execute applications using current state-of-the-art tools, and use the results of our framework to assess whether the security-critical code was covered by the tests. In this paper we report on the design and implementation of BBoxTester, and assess its efficiency and effectiveness by measuring the code coverage achieved by 3 simple automated testing strategies.